How to Organize and Write Effective Test Cases

Great test cases are like good directions: short, clear, and proven to get you where you need to go. They help teams move faster, catch risk earlier, and make quality visible. Here’s a simple, practical guide to organizing and writing test cases that teams actually use.

Why test cases matter (even in an agile world)

- Consistency: Everyone validates the same behavior the same way.

- Traceability: You can show exactly which requirements and risks are covered.

- Onboarding: New teammates learn the system by reading intent, not guesswork.

- Automation-ready: Clear cases are easy to convert into scripts later.

Core principles of effective test cases

- One purpose per case – Keep each case atomic, so a failure points to exactly one broken behavior.

- Deterministic outcomes – Use precise expected results; avoid “it should look OK.”

- Traceable to requirements and risk – Every case should answer “why does this exist?”

- Reusable and maintainable – Prefer data placeholders and stable identifiers; avoid tying steps to cosmetic UI details such as exact labels, colors, or layout.

- Balanced coverage – Include happy, edge, and negative scenarios.

- Easy to find – Predictable names, tags, and foldering matter as much as the content.

Prepare before you write

- Clarify the requirement: preconditions, acceptance criteria, and constraints.

- Define the risk: what’s the worst that can happen if this breaks?

- List key variations: data types, states, roles, devices, and integrations.

- Decide scope: what belongs in this case vs. a separate one?

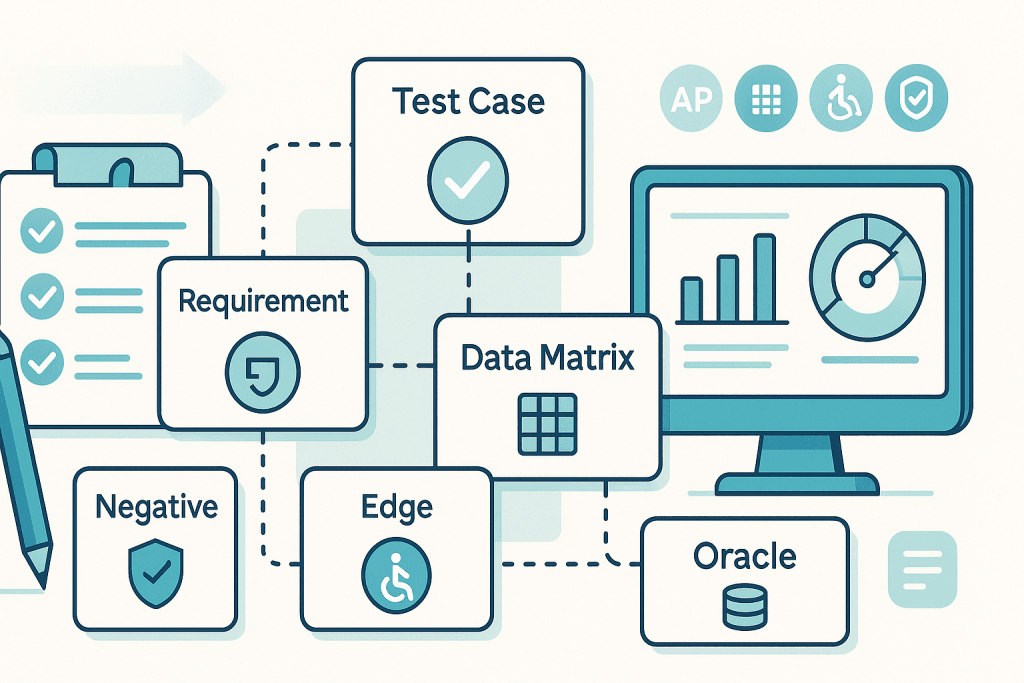

What a good test case contains (in plain words)

- Title: Action + object (e.g., “Refund the full amount to the original card”).

- Purpose: A single sentence explaining what the case proves.

- Links: Requirement IDs, user story, risk tag, release.

- Preconditions: The world that must exist before you start (e.g., a captured payment).

- Test data: Inputs and states you’ll use; note any special values.

- Steps: Short, numbered, and action-focused.

- Expected results: Verifiable outcomes after each key step.

- Oracles: How you’ll know it truly worked—UI, API responses, logs, database changes, emails, etc.

- Postconditions: Cleanup or resets.

- Notes for automation (optional): Hints like stable IDs or endpoints.

Aim for fewer than 8 steps. If a case grows longer or covers more than one intent, split it.
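If your cases live in code or a structured export, the fields above map naturally onto a small record type. This is a minimal sketch, not a prescribed schema: the class names `TestCaseSpec` and `Step`, the `warnings()` helper, and the IDs `REQ-123` and `RISK-HIGH` are all hypothetical, and the step limit simply encodes the "fewer than 8 steps" rule of thumb.

```python
from dataclasses import dataclass, field

MAX_STEPS = 8  # rule of thumb from the guide: aim for fewer than 8 steps


@dataclass
class Step:
    action: str    # short, imperative action
    expected: str  # verifiable outcome ("" when this is not a key step)


@dataclass
class TestCaseSpec:  # hypothetical name, chosen to avoid clashing with unittest.TestCase
    id: str
    title: str
    purpose: str
    links: list[str]             # requirement IDs, user story, risk tag, release
    preconditions: list[str]
    test_data: dict[str, object]
    steps: list[Step]
    oracles: list[str]           # UI, API, logs, database, emails...
    postconditions: list[str] = field(default_factory=list)
    automation_notes: str = ""

    def warnings(self) -> list[str]:
        """Return structural warnings; an empty list means the case looks well-formed."""
        found = []
        if len(self.steps) >= MAX_STEPS:
            found.append(f"{len(self.steps)} steps: consider splitting by intent")
        if not self.links:
            found.append("no requirement or risk links")
        if not any(step.expected for step in self.steps):
            found.append("no expected results")
        return found


refund = TestCaseSpec(
    id="PAY-REF-001",
    title="Refund the full amount to the original card",
    purpose="Proves a full refund returns the captured amount to the card it was charged on.",
    links=["REQ-123", "RISK-HIGH"],  # hypothetical IDs
    preconditions=["A captured payment of 50.00 exists"],
    test_data={"amount": "50.00", "card": "test card ending 4242"},
    steps=[
        Step("Open the captured payment", "Payment status shows Captured"),
        Step("Request a full refund", "Refund is accepted"),
    ],
    oracles=["API: refund status", "DB: refund row", "Email: refund receipt"],
    postconditions=["Delete the test customer"],
)
print(refund.warnings())  # → []
```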

Naming, IDs, and tagging that scale

- IDs: Area-Feature-Sequence (e.g., PAY-REF-001).

- Titles: Start with the verb (Create, Edit, Refund, Cancel…).

- Tags: Use a small, stable set: @ui, @api, @p1, @smoke, @a11y, @security, @negative.

- Suites: Group by product area and keep dedicated suites for Smoke, Regression, Exploratory, and Non-Functional.
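Naming conventions only scale if something enforces them. A minimal sketch of such a check follows, assuming area and feature codes of 2 to 5 upper-case letters and a three-digit sequence; the regex, the `naming_problems` helper, and the starter verb list are illustrative assumptions to adapt to your own convention.

```python
import re

# Area-Feature-Sequence, e.g. PAY-REF-001 (assumed: 2-5 upper-case letters, 3 digits)
ID_PATTERN = re.compile(r"[A-Z]{2,5}-[A-Z]{2,5}-\d{3}")
ALLOWED_TAGS = {"@ui", "@api", "@p1", "@smoke", "@a11y", "@security", "@negative"}
TITLE_VERBS = {"Create", "Edit", "Refund", "Cancel"}  # starter set; extend with your own verbs


def naming_problems(case_id: str, title: str, tags: set[str]) -> list[str]:
    """Return naming/tagging problems; an empty list means the case follows the convention."""
    problems = []
    if not ID_PATTERN.fullmatch(case_id):
        problems.append(f"ID {case_id!r} does not match Area-Feature-Sequence")
    words = title.split()
    first_word = words[0] if words else ""
    if first_word not in TITLE_VERBS:
        problems.append(f"title should start with a known verb, got {first_word!r}")
    unknown = sorted(tags - ALLOWED_TAGS)
    if unknown:
        problems.append(f"unknown tags: {', '.join(unknown)}")
    return problems


print(naming_problems("PAY-REF-001", "Refund the full amount to the original card", {"@api", "@p1"}))
# → []
print(naming_problems("refund1", "Full refund", {"@api", "@urgent"}))  # three problems
```

Running a check like this in review or CI keeps the tag set small and stable instead of letting near-duplicates such as `@urgent` creep in.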

Data and variations: cover what actually breaks

- Equivalence classes: What inputs behave the same? Test one from each class.

- Boundaries: The minimum and maximum, plus the values just below and just above each.

- Combinations: When the number of input combinations explodes, use pairwise (all-pairs) or orthogonal-array sets rather than testing every combination.

- States and transitions: Not just data—consider lifecycle states and forbidden moves.

- Negative paths: Invalid credentials, expired tokens, network hiccups, permission denials.
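For a numeric input with an inclusive valid range, equivalence classes and boundaries can be generated mechanically. A minimal sketch, assuming an integer field such as a quantity limited to 1 to 99 (the field and range are hypothetical); pairwise selection across many parameters is better left to a dedicated generator and is not shown here.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Boundary-value picks for an inclusive integer range: just below, at, and just above each edge."""
    candidates = [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]
    return sorted(set(candidates))  # set() removes overlaps when the range is tiny


def equivalence_representatives(minimum: int, maximum: int) -> dict[str, int]:
    """One representative per class: below range (invalid), in range (valid), above range (invalid)."""
    return {
        "below_range": minimum - 10,
        "in_range": (minimum + maximum) // 2,
        "above_range": maximum + 10,
    }


# Hypothetical field: order quantity, valid from 1 to 99 inclusive
print(boundary_values(1, 99))              # → [0, 1, 2, 98, 99, 100]
print(equivalence_representatives(1, 99))  # → {'below_range': -9, 'in_range': 50, 'above_range': 109}
```

Nine inputs cover three classes and both edges, which is usually far more informative than dozens of arbitrary in-range values.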

Oracles: look beyond the UI

A pass isn’t just a pretty screen. Strengthen your verdict with additional checks:

- API: status codes, response bodies, headers.

- Database: rows inserted/updated with exact values.

- Events/Queues: message shape and delivery.

- External effects: emails, webhooks, ledger entries.

- Non-functional signals: accessibility violations, security headers, basic performance thresholds.
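To make "look beyond the UI" concrete, here is a sketch of one check that asserts on an API-style response, exact database rows, and a queued email. Everything about the system under test is an assumption: `refund_payment`, its 201 status, the table layout, and the in-memory outbox are hypothetical stand-ins built on Python's standard `sqlite3` module so the example runs on its own.

```python
import sqlite3


# Hypothetical system under test: writes to the database, returns an API-style
# response, and queues an email. All names and shapes here are stand-ins.
def refund_payment(db: sqlite3.Connection, outbox: list[dict], payment_id: int) -> dict:
    amount = db.execute("SELECT amount_cents FROM payments WHERE id = ?", (payment_id,)).fetchone()[0]
    db.execute("INSERT INTO refunds (payment_id, amount_cents) VALUES (?, ?)", (payment_id, amount))
    db.execute("UPDATE payments SET status = 'refunded' WHERE id = ?", (payment_id,))
    outbox.append({"to": "customer@example.com", "subject": "Your refund"})
    return {"status": 201, "body": {"payment_id": payment_id, "amount_cents": amount}}


def test_full_refund_uses_several_oracles() -> None:
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE payments (id INTEGER PRIMARY KEY, amount_cents INTEGER, status TEXT)")
    db.execute("CREATE TABLE refunds (payment_id INTEGER, amount_cents INTEGER)")
    db.execute("INSERT INTO payments VALUES (1, 5000, 'captured')")  # precondition: captured payment
    outbox: list[dict] = []

    response = refund_payment(db, outbox, payment_id=1)

    # Oracle 1 (API): status code and response body
    assert response["status"] == 201
    assert response["body"] == {"payment_id": 1, "amount_cents": 5000}
    # Oracle 2 (database): exact rows and values, not just "something changed"
    assert db.execute("SELECT payment_id, amount_cents FROM refunds").fetchall() == [(1, 5000)]
    assert db.execute("SELECT status FROM payments WHERE id = 1").fetchone() == ("refunded",)
    # Oracle 3 (external effect): exactly one email queued
    assert [m["subject"] for m in outbox] == ["Your refund"]
    db.close()  # postcondition: discard the throwaway database


test_full_refund_uses_several_oracles()
```

A UI-only version of this case could pass while the refund row was never written; each extra oracle closes one of those gaps.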

Organizing your repository

- Foldering: Product → Feature → Capability (e.g., Checkout / Payments / Refunds).

- Suites: Keep Smoke, Regression, Exploratory, and Non-Functional (NFR) suites as top-level collections.

- Versioning: Mark which release introduced/changed a case; deprecate instead of duplicating.

- Searchability: Standardize titles and tags so the same search always finds the right set.
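Consistent foldering and tags turn "find the right set" into a simple filter. A minimal sketch, assuming each case records its Product / Feature / Capability path, its tags, and a deprecation flag; the `CaseRef` type, the `find` helper, and the IDs other than PAY-REF-001 are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseRef:
    id: str
    path: tuple[str, ...]      # Product -> Feature -> Capability
    tags: frozenset[str]
    deprecated: bool = False   # deprecate instead of duplicating


def find(
    cases: list[CaseRef],
    path_prefix: tuple[str, ...] = (),
    all_tags: frozenset[str] = frozenset(),
    include_deprecated: bool = False,
) -> list[CaseRef]:
    """Return cases under a folder prefix that carry every requested tag."""
    return [
        c for c in cases
        if c.path[:len(path_prefix)] == path_prefix
        and all_tags <= c.tags
        and (include_deprecated or not c.deprecated)
    ]


refunds = ("Checkout", "Payments", "Refunds")
library = [
    CaseRef("PAY-REF-001", refunds, frozenset({"@api", "@p1", "@smoke"})),
    CaseRef("PAY-REF-002", refunds, frozenset({"@ui", "@negative"})),
    CaseRef("PAY-REF-003", refunds, frozenset({"@api", "@p1"}), deprecated=True),
    CaseRef("PAY-CAP-001", ("Checkout", "Payments", "Capture"), frozenset({"@api", "@p1", "@smoke"})),
]

print([c.id for c in find(library, refunds, frozenset({"@p1"}))])           # → ['PAY-REF-001']
print([c.id for c in find(library, ("Checkout",), frozenset({"@smoke"}))])  # → ['PAY-REF-001', 'PAY-CAP-001']
```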

Review checklist (use before approving a test)

- Single, clear purpose?

- Linked to requirement(s) and risk?

- Preconditions explicit and realistic?

- Steps short, imperative, and unambiguous?

- Expected results measurable and deterministic?

- Edge and negative scenarios represented across the suite?

- Data parameterized or clearly listed?

- Oracles include at least one non-UI check where relevant?

- No duplicates of existing cases?
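Several checklist items can be pre-screened automatically before a human reviewer looks at judgment calls such as "realistic" preconditions. A minimal sketch, assuming cases are plain dictionaries with `title`, `links`, `preconditions`, and `expected` keys; the `review` helper and its list of vague words are assumptions, and the duplicate check only catches identical titles.

```python
import re

# Words that often signal a non-deterministic expectation (assumed starter list)
VAGUE = re.compile(r"\b(should|looks? ok|works|correctly|properly|as expected)\b", re.IGNORECASE)


def review(case: dict, existing_titles: set[str]) -> list[str]:
    """Flag mechanically checkable checklist items.

    existing_titles must already be normalized (stripped and lower-cased).
    """
    findings = []
    if not case.get("links"):
        findings.append("not linked to a requirement or risk")
    if not case.get("preconditions"):
        findings.append("preconditions missing")
    for number, expected in enumerate(case.get("expected", []), start=1):
        if VAGUE.search(expected):
            findings.append(f"expected result {number} is vague: {expected!r}")
    if case.get("title", "").strip().lower() in existing_titles:
        findings.append("possible duplicate of an existing case")
    return findings


existing = {"refund the full amount to the original card"}
draft = {
    "title": "Refund the full amount to the original card",
    "links": ["REQ-123"],  # hypothetical requirement ID
    "preconditions": [],
    "expected": ["Refund page should look OK", "Refund row stores amount_cents = 5000"],
}
for finding in review(draft, existing):
    print(finding)
```

The second expected result passes because it names an exact, observable value, which is the kind of wording the checklist asks for.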

Prioritizing what to run

- Risk-based first: High-impact, high-likelihood failures go to the top.

- Change-based selection: Run the tests that touch changed code, contracts, or dependencies.

- Smoke vs. Regression: Keep a tiny smoke set for fast feedback; run broader regression on merges and nightly.

- Stability watch: Track flaky cases and fix or quarantine promptly.
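Risk-based ordering and change-based selection combine well. The sketch below assumes a 1 to 5 scale for impact and likelihood, scores risk as their product (one common convention, not the only one), and relies on a hypothetical mapping from each case to the modules or contracts it exercises; quarantined flaky cases are skipped.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    id: str
    impact: int       # 1 (minor) .. 5 (severe), assumed scale
    likelihood: int   # 1 (rare) .. 5 (frequent), assumed scale
    touches: set[str] = field(default_factory=set)  # modules/contracts exercised (hypothetical mapping)
    flaky: bool = False  # quarantined until fixed


def select_and_order(cases: list[Candidate], changed: set[str]) -> list[str]:
    """Keep cases that touch changed areas, skip quarantined ones, order by risk score."""
    relevant = [c for c in cases if c.touches & changed and not c.flaky]
    relevant.sort(key=lambda c: c.impact * c.likelihood, reverse=True)
    return [c.id for c in relevant]


cases = [
    Candidate("PAY-REF-001", impact=5, likelihood=3, touches={"refunds", "ledger"}),
    Candidate("PAY-REF-002", impact=2, likelihood=4, touches={"refunds"}),
    Candidate("PAY-CAP-001", impact=5, likelihood=4, touches={"capture"}),
    Candidate("PAY-REF-003", impact=4, likelihood=4, touches={"refunds"}, flaky=True),
]
print(select_and_order(cases, changed={"refunds"}))  # → ['PAY-REF-001', 'PAY-REF-002']
```

PAY-CAP-001 is high risk but untouched by this change, so it waits for the broader regression run.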

Metrics that actually help

- Authoring lead time: Draft → approved.

- Coverage by risk and requirement: What’s actually protected?

- Defect detection effectiveness: The share of defects caught before production versus those that escape to production.

- Automation stability: Flake rate and mean time to fix.

- Case churn: Adds/edits/deprecations—too high means volatility; too low may mean stagnation.
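The metrics above reduce to simple arithmetic once the raw counts are collected. The formulas below are one common way to define them, offered as assumptions rather than standards: defect detection effectiveness as pre-production defects over all known defects, a flake as a run that fails and then passes on retry with no code change, and churn as changes divided by library size for the same period.

```python
from datetime import datetime, timedelta


def authoring_lead_time(drafted: datetime, approved: datetime) -> timedelta:
    return approved - drafted


def defect_detection_effectiveness(pre_prod: int, post_prod: int) -> float:
    """Share of all known defects that were caught before production."""
    total = pre_prod + post_prod
    return pre_prod / total if total else 0.0


def flake_rate(runs: list[tuple[bool, bool]]) -> float:
    """runs holds (failed_first, passed_on_retry) per run; a flake fails, then passes unchanged."""
    if not runs:
        return 0.0
    return sum(1 for failed, passed_retry in runs if failed and passed_retry) / len(runs)


def mean_time_to_fix(durations: list[timedelta]) -> timedelta:
    if not durations:
        return timedelta(0)
    return sum(durations, timedelta()) / len(durations)


def churn_rate(adds: int, edits: int, deprecations: int, library_size: int) -> float:
    """Changes in a period relative to library size; track the trend, not one value."""
    return (adds + edits + deprecations) / library_size


print(defect_detection_effectiveness(pre_prod=45, post_prod=5))                          # → 0.9
print(flake_rate([(False, False), (True, True), (True, False), (False, False)]))         # → 0.25
print(mean_time_to_fix([timedelta(hours=2), timedelta(hours=6)]))                        # → 4:00:00
print(churn_rate(adds=6, edits=10, deprecations=4, library_size=200))                    # → 0.1
```

Note that the third run in the flake example failed and stayed failed, so it counts as a real failure rather than a flake.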

Common pitfalls (and simple fixes)

- Mega-cases that do everything → Split by intent; reference shared data.

- Vague expectations → Replace “should” with specific, observable results.

- UI-only checks → Add API/database oracles to prove real outcomes.

- Copy-paste variants → Use data variations under one intent, not clones.

- Ignoring cleanup → Define postconditions; keep environments healthy.
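The fix for copy-paste variants looks like this in an automated suite: one test expressing one intent, driven by a table of data rows. This sketch uses the standard `unittest` module and its `subTest` context manager so each row reports separately; the `refund_allowed` rule it exercises is a hypothetical stand-in for real business logic.

```python
import unittest


# Hypothetical rule under test: a refund must be positive and no more than the captured amount.
def refund_allowed(captured_cents: int, refund_cents: int) -> bool:
    return 0 < refund_cents <= captured_cents


class RefundAmountTest(unittest.TestCase):
    """One intent (only amounts within the captured total are refundable), many data rows."""

    CASES = [
        # (label, captured_cents, refund_cents, expected)
        ("full refund", 5000, 5000, True),
        ("partial refund", 5000, 1, True),
        ("zero amount", 5000, 0, False),
        ("over-refund by one cent", 5000, 5001, False),
        ("negative amount", 5000, -100, False),
    ]

    def test_refund_amount_limits(self) -> None:
        for label, captured, refund, expected in self.CASES:
            with self.subTest(label):  # each row is reported on its own if it fails
                self.assertEqual(refund_allowed(captured, refund), expected)


if __name__ == "__main__":
    unittest.main(argv=["refund-amount-tests"], exit=False)
```

Adding a new variation is now a one-line data change rather than a cloned case that drifts out of sync.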

Accessibility, security, and performance: don’t forget the basics

- Accessibility: Keyboard navigation, focus order, alt text, ARIA roles, contrast.

- Security: Authorization checks, idempotency (repeating a request does not duplicate its effect), and no sensitive data exposed in logs.

- Performance: Simple thresholds on key endpoints (e.g., P95 response time under baseline).

These don’t need full separate suites to start—add a few lightweight checks where they naturally fit.
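A lightweight performance check can be as small as computing P95 over measured response times and comparing it with a baseline. This sketch uses the nearest-rank percentile method, one of several definitions (monitoring tools often interpolate, so their numbers can differ slightly); the sample values and baselines are hypothetical.

```python
def p95(samples_ms: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math, 1-based
    return ordered[rank - 1]


def within_baseline(samples_ms: list[float], baseline_ms: float) -> bool:
    """Pass when the P95 response time does not exceed the baseline."""
    return p95(samples_ms) <= baseline_ms


samples = [10 * i for i in range(1, 21)]  # hypothetical timings: 10, 20, ..., 200 ms
print(p95(samples))                    # → 190
print(within_baseline(samples, 200))   # → True
print(within_baseline(samples, 180))   # → False
```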

A simple adoption plan

- Pick one feature and write (or rewrite) 10–20 cases using the structure above.

- Tag with risk and map to requirements.

- Introduce review: one author, one approver.

- Pilot reporting: measure authoring time, coverage, and a small smoke set’s effectiveness.

- Expand carefully: add negative/edge matrices, then consider automation for the top P1 cases.

Final thought

Effective test cases are small, clear promises: “If we follow these steps, we’ll know whether the system truly works.” Keep them atomic, traceable, and rich in oracles. Organize them so anyone can find, trust, and reuse them. Do that, and your test library becomes an asset that speeds delivery—not a binder that gathers dust.